Too soon?

I recently had a revelation: I am disappointingly terrible at recognizing the best time to write code. There, I said it. With that enormous weight off of my shoulders, I can now begin to heal. But first, let’s rewind a few months.

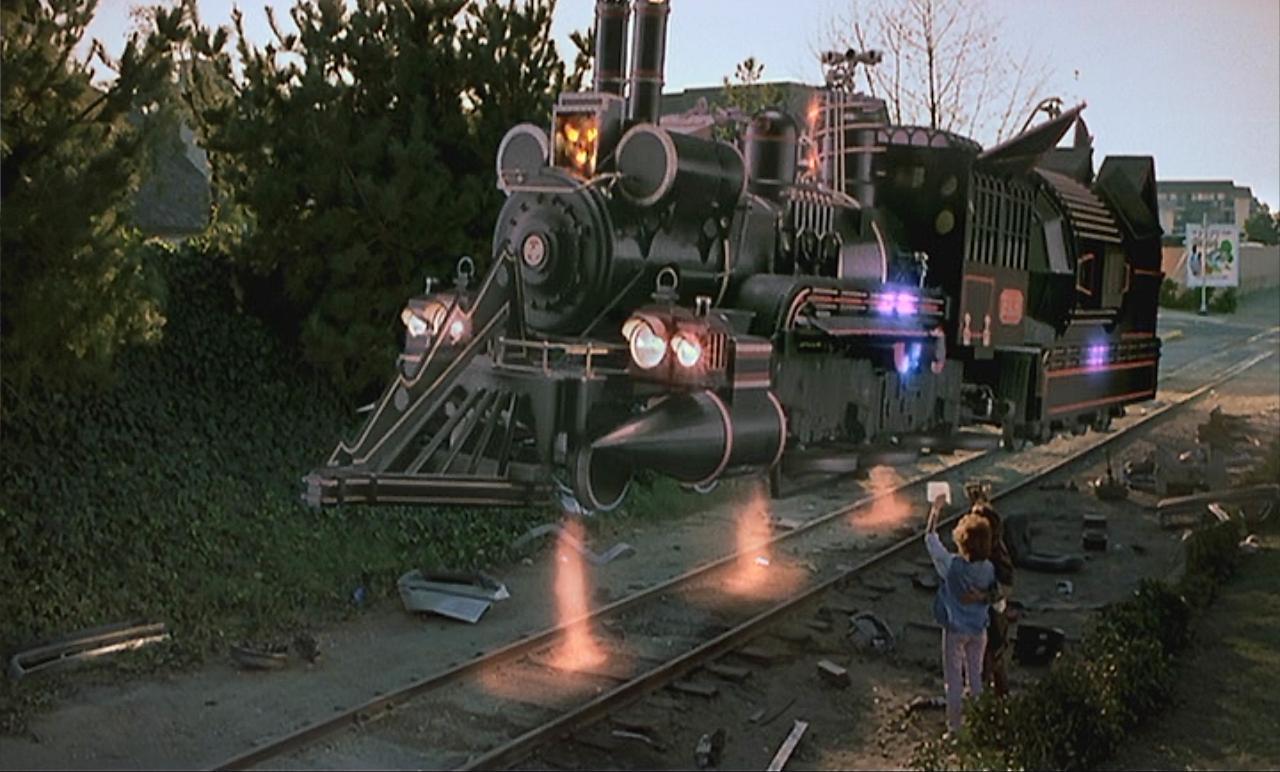

It’s early June. We’ve recently launched the first post-PoC implementation of a major project to combine two similar systems¹ into one cohesive whole, with each system supporting different functionality, employing different technologies, and running in slightly different environments. I liken this project to one combining a Bombardier Global 6000 private jet with the N700 Series Shinkansen high-speed train. Sure, both are best-of-breed in their respective categories for transporting passengers, but that’s where the similarities end. The private jet is small and nimble, has an 11,000 km (6,900 miles) range, can travel at speeds of 900 km/h (560 mph) over any terrain, but has room for only 13 passengers. The high-speed train is large with room for up to 1323 passengers, but travels at speeds of only 300 km/h (186 mph) and is limited to transporting passengers over land, wherever tracks can be laid.

Building a transport that can economically carry more than 1300 passengers over any terrain at speeds of 900 km/h (560 mph) is not an easy task. Attempts to scale up the private jet to accommodate more passengers will quickly run into limitations. The Airbus A380, the largest commercially available airliner (as of 2016-08-21), can accommodate only 823 passengers (if all available space is configured as economy class). Similarly, the high-speed train will encounter limits on its top speed and on the terrain over which it can travel. The current land speed record (LSR) for a wheel-driven vehicle is a paltry 706 km/h (440 mph); not quite the 900 km/h we need. Not to mention that as long as the train needs to ride on tracks, traveling across large bodies of water or mountainous terrain will be prohibitively expensive.

In order to achieve our goals, a new system will need to be designed. The question becomes: do we re-engineer the private jet to scale beyond 800 passengers, do we re-engineer the high-speed train to increase its speed and overcome its terrain limitations, or do we start with a clean slate? We chose to re-engineer the high-speed train, and by early June we had managed to get our locomotive to fly at high speeds but hadn’t yet managed to attach passenger cars to it.

With the inaugural launch of our flying locomotive behind us, the larger team was deep in the throes of Q3 planning. It was clear what needed to be delivered: extend the combined system to include more of the functionality found in the original systems and scale it 100×.

As one would imagine, the combined system is expected to be feature-compatible with the original systems. This introduces its own set of challenges. Consider the food preparation process on the land-based train. Weight and the various forces exerted on the equipment of the kitchen car are likely to be much different on a land-based train than on a flying train; the flying train would likely contain equipment not much different than what appears on a modern day passenger airliner. But with the need to handle twice as many passengers as the largest airliner, the equipment will have to be optimized for throughput.

Many components of the existing system were very close to their scaling limits, requiring a significant amount of re-engineering to reach our targets. This is not unlike increasing the passenger capacity of the flying train beyond the small number that can ride in the locomotive: the couplers connecting the passenger cars will need to be redesigned to handle the complications of flight, each passenger car will have to provide its own lift or the train won’t get off the ground, not to mention the use of lighter-weight materials to achieve the stringent weight requirements of powered flight. Just like our system, once all of these problems have been solved, further increasing the passenger capacity will be much less complicated (being horizontally scalable, adding more passenger cars will probably allow us to increase capacity).

After spending the better part of June updating the design of the combined system to support more functionality and expanded scale, July began with the various teams diving into the details of the various components, answering the hard questions about how we will achieve our high-level goals on such a short timeline.

Fast-forward to today, halfway through the quarter. Looking at our burn down chart, things look pretty good:

But in reality, things are far from good. One of the things that is missing from the burn down chart is how we are doing at delivering features that unblock our customers vs. features that (although important) do not. For example, if we are responsible for translating the requirements from the chef into specifications for the kitchen car of the flying train, we should focus our attention on determining the weight, dimensions, and location of the equipment that will be installed in the car rather than the color of cupboards and counter tops or wrestling with decisions regarding the use of PEX, CPVC, or PP for the pipes supplying water. By installing the equipment in a car, our customers can be unblocked. For example, the chef can learn how to navigate the kitchen and determine where to optimally place utensils and organize the food. The chassis designers can verify that their design properly distributes weight throughout the structure. The mechanical engineers can verify that the propulsion system can provide enough lift for the (extra) heavy kitchen cars. The structural engineers can ensure the couplers between cars can withstand the additional stresses caused by the additional weight of the equipment found in the kitchen cars.

I suspect some of these issues sound a lot like integration considerations. They should because that is exactly what they are!

If we only consider blocking functionality (that is, functionality that when delivered will unblock customers), our burn down chart probably looks less impressive:

So, what did we do wrong? Many things! However, I think our mistakes fell into one of two broad categories:

- Delivering the wrong thing at the wrong time

- Building abstractions too early

We are clearly (sadly?) not building a flying train. What is it that we’re building?

Our data are generated using lambda architecture. As part of the effort to scale our system 100×, our customer (another engineering team) is creating a new data pipeline for processing speed data that must be joined with data owned by my team. Our existing systems will not scale much beyond their current capacity, requiring us to migrate our system to infrastructure that will be capable of scaling to the required 100× increase in data (and beyond). At a high level, our system is structured as follows:

We have new data arriving constantly. One copy of the data is written to storage (the master dataset) that our batch materializer processes daily, generating the serving data view. A second copy of the data is written to the speed data processor that updates the speed data view. The λ-service ties both of these views together with a simple API for querying the data. It is responsible for fetching data from both the speed and serving views and merging them into a combined view. This is pretty standard lambda architecture. Our customers’ interaction with the system is limited to the λ-service.
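The merge step performed by the λ-service can be illustrated with a minimal sketch. Everything here is hypothetical: the views are plain in-memory dictionaries rather than real data stores, the function name `query` is invented for illustration, and the rule that the speed view wins on conflict is only one possible policy, assumed here to keep the example short.

```python
from typing import Dict, Iterable, Optional

# Hypothetical stand-in for a view: key -> value held in memory.
View = Dict[str, str]


def query(keys: Iterable[str], serving_view: View, speed_view: View) -> Dict[str, str]:
    """Fetch each key from both views and merge them into a combined view."""
    combined: Dict[str, str] = {}
    for key in keys:
        batch_value: Optional[str] = serving_view.get(key)
        recent_value: Optional[str] = speed_view.get(key)
        # Assumption: data from the speed view is newer, so it wins on conflict.
        value = recent_value if recent_value is not None else batch_value
        if value is not None:
            combined[key] = value
    return combined
```

Callers see only the combined result; which view a value came from stays hidden behind the service boundary.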

Delivering the Wrong Thing

If you were to ask past-Michael in what order these components needed to be delivered, he would have probably answered: it doesn’t matter because the system isn’t correct until everything is available. For much of my career, I have had few customers that were other engineers. My team was usually delivering some new feature that was either shippable (met all the requirements) or not (did not meet all the requirements); integration wasn’t a thing. Shipping a feature meant adding it to what would eventually become a release candidate that would undergo extensive testing before being shipped to external customers. In some cases, this also meant waiting several months until the next release cycle. Being late did not impact other teams; the features they were adding were usually for a different product or otherwise orthogonal to what my team was delivering. Even within a team, engineers were often working on different projects, requiring no intra-team integration. In these situations, the order in which functionality was implemented was not critical because a feature was not shippable until all basic functionality was complete².

Consider our lambda architecture for a moment. Our customer interacts exclusively with the λ-service. The details of how our speed and serving views are materialized are of little consequence to our customers. Sure, their presence will impact the quality of the results being returned, but that should not have a material impact on integrating our systems together or performing end-to-end testing. The λ-service is a linchpin component in our project.

Instead of working on building a λ-service with minimal functionality, we first focused our attention on the speed and batch layers. The batch layer was mostly available as a result of previous projects, requiring little work to deploy the serving view for consumption by the λ-service. The speed layer, by contrast, needed to be written. Almost two weeks in, after we had made some progress on the speed layer, our customer was excited to start integrating with the λ-service. Because of our poor choice of what to work on, integration was delayed by several weeks. Aside from the disruption to the integration testing, this was also a disruption to the team; we had to stop working on the speed layer and change gears to start working on the λ-service. By the time we got back to working on the speed layer, it took a few days to recover from the disruption.

Why did this happen? One thing we failed to do is ask ourselves this simple question:

What must I deliver to ensure my customer is not blocked (on me) and when must I deliver it?

In this case, the answer seems simple: deliver the λ-service with which our customer can integrate. But what does this mean? What functionality must the λ-service provide? Support for the speed view? Support for the serving view? Support for both? As you can see, this is not always an easy question to answer and will likely involve discussions with your customer. They likely have plans to provide incremental value of their deliverables and coordinating with them will increase the probability that both of you will deliver value. Having said that, the following general techniques may be helpful.

Publish the API as soon as possible

When integrating with your component, the first thing your customer will want to do is write code that accesses your component. By publishing the API early, without an implementation, you give your customers an opportunity to take it for a test drive. The earlier this is possible, the earlier you can be certain the API is appropriate for your customers (remember, APIs should always favor being easy for customers to use, even if that is at the expense of being hard to implement). Since there is no implementation, the cost of change is low at this stage. A well-designed API will provide an appropriate abstraction and a clean separation of concerns between components. This is the best time to test whether your API meets these criteria.

For our project, publishing the λ-service API should be done before writing the implementation. This is the only part of our service that is exposed. An astute reader would have noticed that there are internal APIs, such as the format in which the speed and serving data views are stored. These should be handled in the same way as external APIs and should be published before writing any code that will use them (in this case, read/write data from/to data stores). Although we didn’t start working on the λ-service at the right time, we published the APIs early on when we did start working on it. This allowed us to identify limitations in the API and correct them before writing any code behind it.
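As an illustration only, publishing an API without an implementation might look like the sketch below. The names `Record`, `LambdaService`, `get`, and `batch_get` are invented for this example and are not the actual λ-service API; the point is that customer code such as the hypothetical `count_available` can be written and type-checked against the interface before any implementation exists.

```python
from dataclasses import dataclass
from typing import Mapping, Optional, Protocol, Sequence


@dataclass(frozen=True)
class Record:
    """Hypothetical result type returned to customers."""
    key: str
    value: str


class LambdaService(Protocol):
    """Hypothetical published API: signatures only, no implementation."""

    def get(self, key: str) -> Optional[Record]:
        """Return the record for key, or None if there is no data."""
        ...

    def batch_get(self, keys: Sequence[str]) -> Mapping[str, Record]:
        """Return records for the keys that have data."""
        ...


def count_available(service: LambdaService, keys: Sequence[str]) -> int:
    """Example customer code that depends only on the published interface."""
    return len(service.batch_get(keys))
```

If writing something like `count_available` feels awkward, that is a cheap signal that the API needs another pass while changing it still costs almost nothing.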

Create a simple stub implementation

Now that the API has been decided and your customer has written some code, they will soon want your API to start providing valid responses. This can be achieved by delivering a stub implementation that will allow your customers to interact with the service as if it were complete. For services, this would also involve deploying (perhaps in a staging environment) a service that exposes your stub implementation. Depending on your API, this might mean deterministically returning canned results whose value depends on the associated request. This simple stub implementation will facilitate the integration testing with your component. The earlier this can be performed, the more time will be available for uncovering and fixing issues.

For our project, deploying a stub λ-service implementation was completed once the API was finished. The stub implementation generated fake data that was deterministic for a given request. This unblocked our customers for most of their end-to-end testing (due to the nature of the data, certain scenarios could not be tested with the generated data). Although we didn’t start working on the λ-service at the right time, we quickly deployed a stub service when we did start working on it. This allowed us to unblock our customers, as they were able to test their systems against our services, performing integration early on in the development process.
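A stub of this kind can be very small. The following is a sketch under stated assumptions, not our actual stub: the function names and the `fake-` value format are made up, and requests are reduced to plain string keys. It derives each canned value from a hash of the request so that the same request always yields the same response.

```python
import hashlib
from typing import Dict, Sequence


def stub_value(key: str) -> str:
    """Return fake data that depends only on the requested key."""
    # hashlib is used instead of the built-in hash(), which is randomized
    # per process for strings and would break determinism across restarts.
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return f"fake-{digest[:8]}"


def stub_batch_get(keys: Sequence[str]) -> Dict[str, str]:
    """Hypothetical stub endpoint: canned, deterministic results per key."""
    return {key: stub_value(key) for key in keys}
```

Because the output is a pure function of the request, customers can assert on exact values in their integration tests, and those assertions keep passing when the stub is redeployed.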

Provide functionality through short, incremental iterations

Now that the stub service has been deployed and basic integration testing can be done (and in some cases, a stub can facilitate a substantial portion of the integration testing), functionality should be added through short iteration cycles. This is perhaps one of the harder parts of the process as it involves decomposing your service and identifying which components provide value on their own; something that might not seem obvious at first. Once the functionality has been carved up, it should be delivered in short iterations, with incremental benefit delivered after each iteration.

For our project, there are two obvious stages into which we could split the λ-service implementation: serving and speed views. As mentioned above, the batch data processing was mostly complete, so supporting the serving view would be relatively easy. Since batch data is processed daily, this would provide access to the full dataset with a 24-hour lag; a huge benefit for a relatively small amount of work. The speed data is much more complicated, and provides only a small incremental value. Support for speed views could be further divided into two sub-deliverables: ingestion and retrieval. Once ingestion is supported, retrieval can be implemented by merging the speed and serving results together. In our particular system, there are a few related datasets accessed through our service, not all of which are required to provide the majority of the functionality to our customers. By further splitting the iterations into cycles by the data they provide, we can deliver more value to our customer sooner. For example, we could first support the serving view of data X, then the speed view of data X, then the serving view of data Y, and finally the speed view of data Y.

We failed at this by not having any iterations, attempting to deliver functionality for the complete λ-service within a single iteration. This has blocked our customer as they have been unable to fully test their service.

Building Abstractions too Early

If you were to ask past-Michael what he thought of building abstractions, he probably would have responded: Build them early and often. Write code that can be easily extended when new functionality is introduced. I also suspect he would have chuckled at the suggestion that an abstraction could be built too early. Perhaps you are also suspicious of such claims. Perhaps present-Michael can assuage your concern.

The biggest problem with building an abstraction too early is that it delays your ability to deliver value. Consider our project to build the λ-service. Although we had unblocked our customers by completing our simple stub implementation, this was only temporary, as we would soon block them again if our λ-service didn’t start returning real data. Since the data for the serving view was already available, it made sense to first support retrieving that data only, leaving support for retrieving data from the speed view to a future iteration.

The allure of building an abstraction at that point, knowing that support for retrieving data from the speed view would soon follow, was overwhelming. The code had to be written anyway, why not do it right away? We could build an abstraction to support data from both the speed and serving views and supply a null storage object (that always returns no data), but this would require implementing the complicated merge code now. Code that we don’t need. Cruft that would be slain if discovered. This code is unlikely to be simple. How do we handle retrieval errors? Partial results on batch gets? Even if all the data is successfully retrieved, how do we resolve conflicts in the merged data? Does the serving view win? Does the speed view win? Is it context dependent? These are all things that must be considered, coded, tested, reviewed, debugged. All that work for no added value because we’re not retrieving any data from the speed view.

By eliminating the abstraction and implementing functionality as if it were not going to be extended, value can be delivered quickly. The introduction of abstractions can be delayed until the functionality is extended. Whatever abstractions are introduced, they should be limited to only what is necessary to supply the needed functionality. Put another way:

Write the bare minimum amount of code necessary to get the job done, but no less.

In the context of our project, the best way to provide value when introducing support for serving view data is to write the code as if only serving view data will ever be supported, completely ignoring speed view data. Once this functionality has been deployed and we move on to adding support for speed view data, we can introduce an abstraction that simplifies the support of speed view data. The key to this second step is the proper employment of refactoring. If each bit of functionality is introduced without appropriate refactoring, the code will evolve into a jumbled mess. At each stage, leave the code in such a state as to appear as if all functionality was known when implemented. When we add support for merging in the speed view data, the code should look as if it were initially written with support for merging data from the speed and serving views. This is such an important concept that it deserves a post of its own.
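To make the first step concrete, here is a minimal sketch of what "only serving view data will ever be supported" could look like. The class name, the method name, and the dictionary standing in for the serving view are all hypothetical. Note what is absent: no storage interface, no null speed store, and no merge logic.

```python
from typing import Dict, Sequence


class ServingOnlyService:
    """Hypothetical first iteration: reads the serving view and nothing else."""

    def __init__(self, serving_view: Dict[str, str]) -> None:
        # Assumption: the serving view is a simple key -> value mapping.
        self._serving_view = serving_view

    def batch_get(self, keys: Sequence[str]) -> Dict[str, str]:
        """Return values for the keys present in the serving view."""
        return {key: self._serving_view[key] for key in keys if key in self._serving_view}
```

When speed view support arrives, this class is the thing to refactor; questions such as conflict resolution and partial results are answered then, with real requirements in hand.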

I learned a lot of hard lessons over the past few weeks. Lessons that, in retrospect, I should have learned many years ago. I can think of several projects that were unnecessarily delayed due to my inclination to only deliver complete solutions. If I were to distill what I have learned during this process into one concept for you to remember, it would be:

Always be working on the feature that provides the most value to your customers.

Caveat: This may not be the feature they think they need. Building infrastructure that will make you and your team move faster may provide the most value to your customer.

¹ One of these systems came as part of the acquisition that brought me to this company while the other was the company’s existing implementation.

² In practice, it’s not quite as simple as this. Being able to deliver a functional, but incomplete, feature is beneficial as it opens many doors, including:

- Early access to new features to get valuable, early feedback from customers.

- Scaling back the scope to meet a deadline (rather than not shipping at all).